LLMWare

LLMWare offers secure, local deployment of small language models for enterprises to automate and enhance productivity in compliance-heavy sectors.

LLMWare Introduction

LLMWare targets enterprises with data-sensitive tasks such as information retrieval, contract review, and SQL query generation. It relies on small language models (SLMs) that act as efficient, specialized assistants for sectors such as finance, law, and compliance. Its standout feature is local deployment: users can run it privately on a laptop or within their organization’s secure infrastructure, so sensitive data does not have to leave the environment, which reduces the risk of data breaches. Support for several vector databases, including FAISS and MongoDB Atlas, simplifies integration. LLMWare is open source and comes with extensive documentation, including video tutorials, which makes it approachable for developers who are relatively new to AI. For enterprise developers who want to boost productivity while keeping data secure, LLMWare is a solid choice that does not demand deep technical expertise.

LLMWare Key Features

Local Deployment

Run AI models directly on laptops or private cloud setups, ensuring data privacy and reducing the risk of data breaches. Perfect for data-sensitive corporate environments.

Small Language Models

Specifically designed to be lightweight and efficient, these models can be deployed locally without needing external cloud services, preserving both performance and security.

End-to-End Solution

Offers a comprehensive framework from development to deployment, including tools for AI Agent workflows and Retrieval Augmented Generation (RAG), all tailored for enterprise use.
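
To make the Retrieval Augmented Generation idea concrete, here is a minimal conceptual sketch in plain Python. It does not use the LLMWare API; the function names (score, retrieve, build_prompt) and the sample passages are hypothetical, and the word-overlap scoring is a stand-in for the embedding-based retrieval a real pipeline would use.

```python
from __future__ import annotations

# Conceptual RAG sketch in plain Python; this is NOT the LLMWare API.
# All function names and sample passages below are hypothetical.


def score(question: str, passage: str) -> int:
    """Count distinct question words that also appear in the passage."""
    q_words = set(question.lower().split())
    p_words = set(passage.lower().split())
    return len(q_words & p_words)


def retrieve(question: str, passages: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k passages with the highest word-overlap score."""
    ranked = sorted(passages, key=lambda p: score(question, p), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, context: list[str]) -> str:
    """Place the retrieved passages ahead of the question in one prompt."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Context:\n{joined}\n\nQuestion: {question}\nAnswer:"


passages = [
    "The termination notice period is 30 days.",
    "Payment is due within 45 days of invoice.",
    "The agreement is governed by the laws of England.",
]
question = "What is the termination notice period?"

# Retrieve the most relevant passage, then assemble the prompt for a model.
prompt = build_prompt(question, retrieve(question, passages, top_k=1))
print(prompt)
```

The resulting prompt would then be passed to a locally deployed model, so the model answers from the retrieved text rather than from memory alone.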

Extensive Model Library

Access to more than 75 models on Hugging Face and more than 100 open-source examples, providing a robust starting point for enterprise applications such as contract review and SQL query generation.

Easy Integration

Seamlessly integrates with major vector databases like FAISS, Milvus, and Pinecone, allowing for production-grade embedding capabilities and enhancing the overall performance of AI applications.
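
As a rough illustration of what a vector database does on the application's behalf, the sketch below stores a few embedding vectors in memory and ranks them by cosine similarity to a query vector. It is a toy stand-in written in plain Python, not the FAISS, Milvus, Pinecone, or LLMWare API; the TinyVectorIndex class and the three-dimensional vectors are hypothetical.

```python
import math

# Toy in-memory vector index; a stand-in for a real vector database.
# TinyVectorIndex and the sample vectors are hypothetical.


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class TinyVectorIndex:
    def __init__(self):
        self._items = []  # list of (doc_id, vector) pairs

    def add(self, doc_id, vector):
        """Store one embedding under a document id."""
        self._items.append((doc_id, vector))

    def search(self, query, top_k=1):
        """Return the top_k (doc_id, similarity) pairs, most similar first."""
        scored = [(doc_id, cosine(query, vec)) for doc_id, vec in self._items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


index = TinyVectorIndex()
index.add("contract", [1.0, 0.0, 0.0])
index.add("invoice", [0.0, 1.0, 0.0])
index.add("policy", [0.7, 0.7, 0.0])

query = [0.9, 0.1, 0.0]
results = index.search(query, top_k=2)
print([doc_id for doc_id, _ in results])
```

A production vector database adds persistence and approximate nearest-neighbor search at scale, but the interface is broadly the same: add embeddings, then query for the closest ones.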

LLMWare Use Cases

Automated Contract Review for Legal Teams: Legal teams can leverage LLMWare's small language models to automate contract reviews, reducing the time spent on repetitive tasks and minimizing human errors, ultimately enhancing productivity and allowing lawyers to focus on complex legal issues.

Data Retrieval in Financial Services: Financial analysts can use LLMWare to quickly retrieve critical data points from vast databases using locally deployed AI, ensuring data security and privacy while accelerating the decision-making process with accurate and fast information access.

Compliance Reporting for Regulatory Industries: Compliance officers in regulatory-intensive industries can generate detailed compliance reports effortlessly by deploying LLMWare's specialized models on their local systems, streamlining the reporting process and maintaining strict data confidentiality.

Enhanced Productivity for Developers: Enterprise developers can create lightweight, locally deployed AI apps with LLMWare to automate workflows such as SQL queries and report generation, significantly boosting productivity and reducing manual, error-prone tasks.

Customer Service Optimization in Enterprises: Customer service departments can utilize LLMWare’s AI agent workflows to automate responses to common queries, enhancing efficiency and allowing human agents to handle more complex customer issues, ultimately improving customer satisfaction.

LLMWare User Guides

Step 1: Install LLMWare by running pip install llmware in your command line.

Step 2: Explore the 100+ examples in the open-source repo to understand implementation.

Step 3: Choose a pre-built specialized model from Hugging Face or fine-tune your own.

Step 4: Deploy the model locally on your laptop or private cloud to ensure data security.

Step 5: Integrate with vector databases like FAISS, Redis, or MongoDB for data embedding.
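
Before moving past Step 1, it can help to confirm that the package is actually importable in the active Python environment. The following is a small sketch using only the standard library; the helper name is_installed is hypothetical, and the check only looks the package up without importing it or calling any LLMWare function.

```python
import importlib.util


def is_installed(package_name: str) -> bool:
    """Return True if the named top-level package can be found for import."""
    return importlib.util.find_spec(package_name) is not None


# "llmware" is the package name used by `pip install llmware` in Step 1.
if is_installed("llmware"):
    print("llmware is available; continue with Step 2.")
else:
    print("llmware not found; run: pip install llmware")
```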

LLMWare Website Analytics

- United Kingdom: 50.6%

- India: 49.4%

LLMWare Alternatives

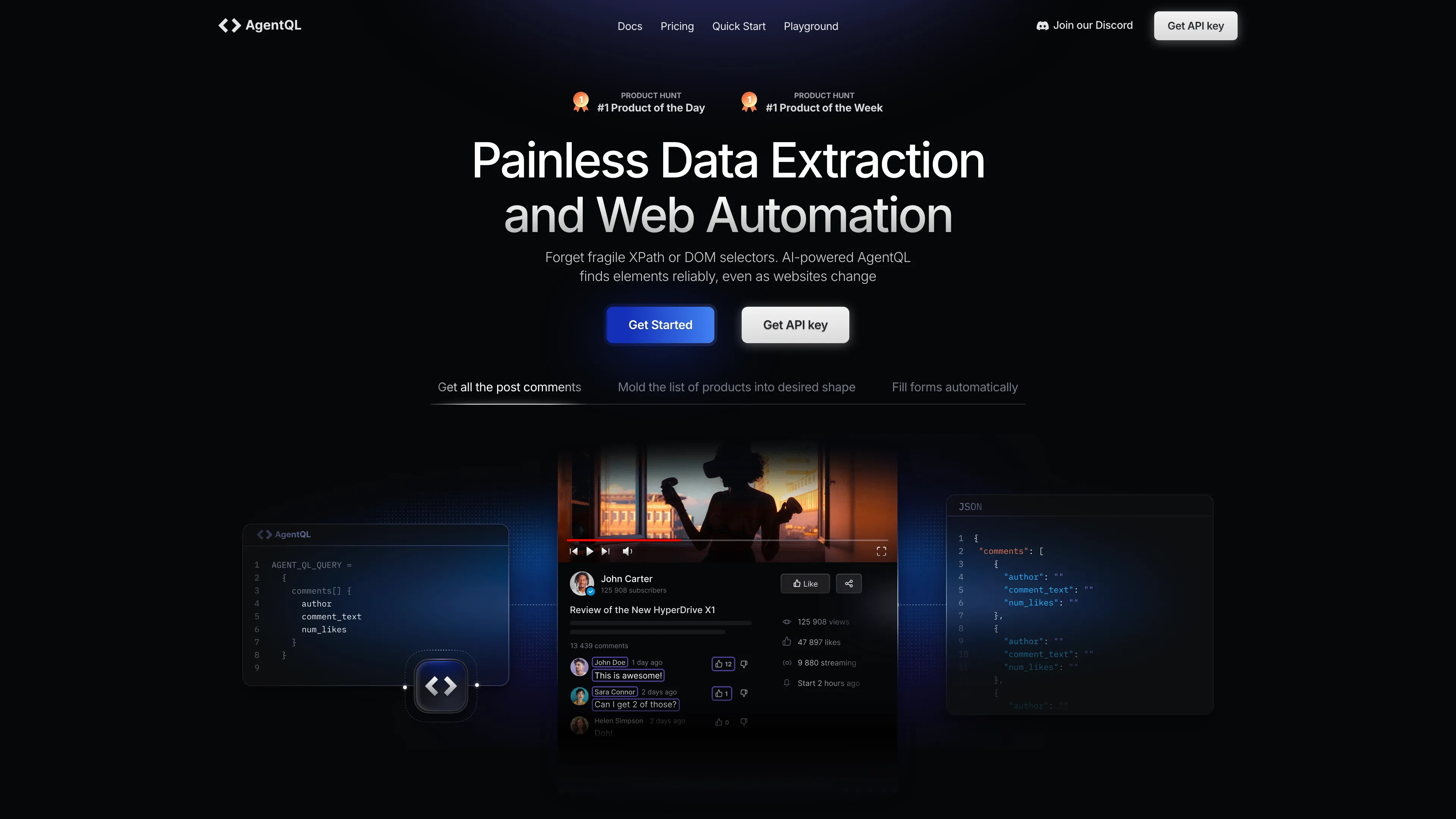

AgentQL is an AI-powered tool for robust data extraction and web automation, using natural language for reliable element identification, even as websites change.

AIpex is an intelligent Chrome extension that organizes and manages tabs with AI-powered grouping and smart search features, enhancing your productivity effortlessly.

AI Product Shot enables brands to create stunning, studio-quality product images without physical setups, offering unique, photorealistic results that drive sales.

Allapi.ai offers seamless API integration, simplifying complex processes for developers and enhancing productivity with user-friendly tools.

AnonAI is a private AI assistant similar to ChatGPT, with no tracking, no logins, and local data storage, powered by top open-source AI models.

Augment UI uses AI to quickly prototype frontend designs, allowing you to generate and edit code directly in the browser for seamless development.