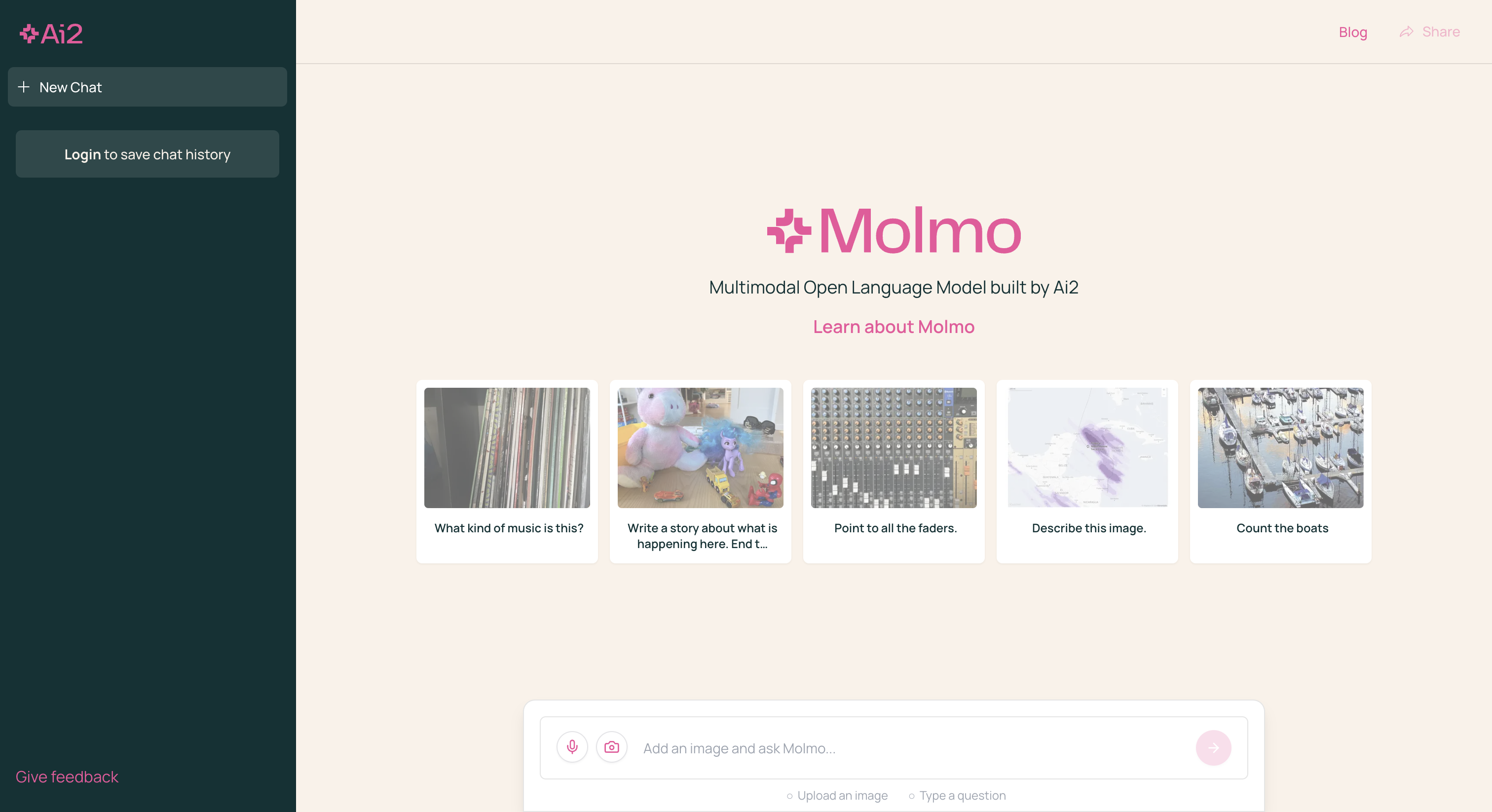

MolmoAI by Ai2

Molmo is an open multimodal AI model family enabling interaction with virtual and physical environments through detailed human-annotated datasets.

MolmoAI by Ai2 Introduction

Molmo is an innovative multimodal AI model family designed to interact with the digital and physical worlds. By using a robust vision encoder and a language model, it enables diverse applications such as image captioning and virtual interactions. Unlike traditional models that rely on vast amounts of often noisy data, Molmo focuses on high-quality datasets, ensuring clearer, more accurate outputs. Its ability to 'point' to elements in an image enhances interaction, much like pointing in real life simplifies communication. This approach not only advances academic benchmarks but also opens opportunities for more intuitive AI integration in everyday technology, making it a game-changer in the AI field.

MolmoAI by Ai2 Key Features

Multimodal Interaction

Molmo models empower AI to not just interpret but also interact with both physical and virtual environments by using innovative pointing mechanisms, enhancing user experience beyond simple language responses.

Open and Transparent Architecture

Built on open weights, data, and code, Molmo champions transparent AI innovation, leveraging human-generated data for superior VLM performance without relying on proprietary systems.

Quality over Quantity: PixMo Data

Molmo's training data emphasizes quality through detailed human audio descriptions, enabling the AI to understand images deeply and provide accurate, nuanced responses with less data noise.

Diverse Application Scenarios

With capabilities like pointing, question answering, and document reading, Molmo excels in applications requiring visual explanations, improving tasks from web navigation to robotic guidance.

Human-Centric Evaluation

Through large-scale human preference rankings, Molmo ensures its models align closely with user expectations, offering performance that meets both academic benchmarks and practical human evaluations.

MolmoAI by Ai2 Use Cases

Digital Art Curation: Molmo enables digital artists to create interactive galleries. Using its vision-language capabilities, artists transform static images into immersive narratives, enriching viewer experiences.

Virtual Education Enhancement: Teachers leverage Molmo's pointing feature to guide students through complex diagrams. The ability to highlight and describe intricate details aids comprehension in online education.

AR Navigation Guidance: Molmo supports augmented reality by interpreting environments and providing navigational cues. Users enjoy seamless, interactive navigation, enhancing travel and exploration experiences.

Accessible Information: Molmo assists visually impaired users by reading documents and images aloud, while pointing to essential elements. This feature ensures inclusivity, making information accessible.

Smart Home Interaction: Home assistants utilize Molmo to recognize and execute visual commands. By identifying objects and receiving verbal instructions, it enhances home automation, offering convenience and efficiency.

MolmoAI by Ai2 User Guides

Step 1: Access the Molmo Demo via the provided website link.

Step 2: Upload or select an image to begin interaction with Molmo.

Step 3: Use the pointing feature to highlight objects in the image.

Step 4: Ask questions about the image for detailed responses.

Step 5: Explore more advanced features like OCR for document reading.

MolmoAI by Ai2 Frequently Asked Questions

MolmoAI by Ai2 Website Analytics

- United States27.8%

- China6.8%

- India6.6%

- Vietnam5.8%

- United Kingdom4.3%

MolmoAI by Ai2 Alternatives

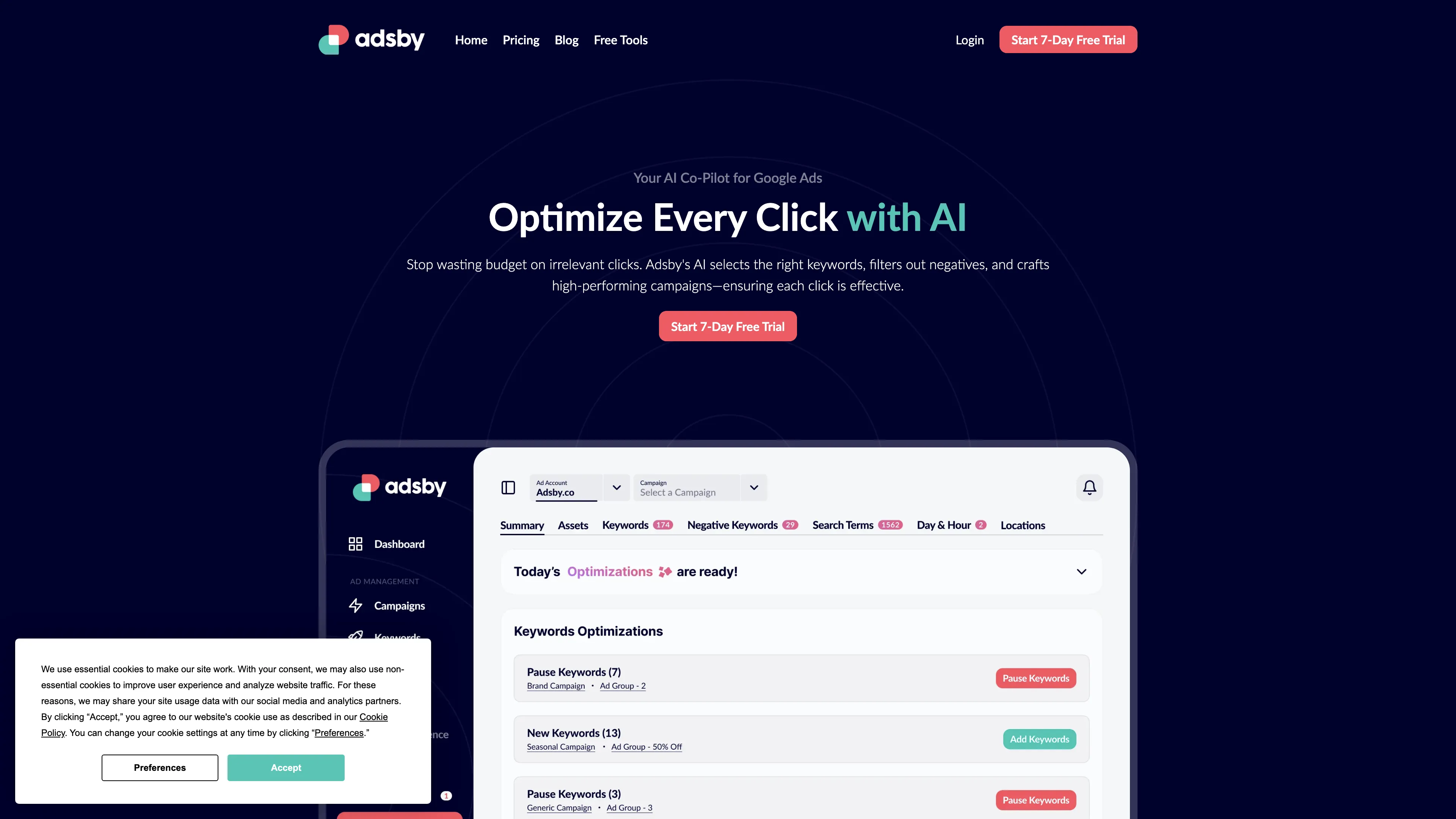

Adsby optimizes Google Ads with AI, maximizing Return on Ad Spend by choosing precise keywords and crafting efficient ad campaigns swiftly.

AI PDF Summarizer instantly creates concise PDF overviews, enhances productivity with multilingual support, and ensures data security online.

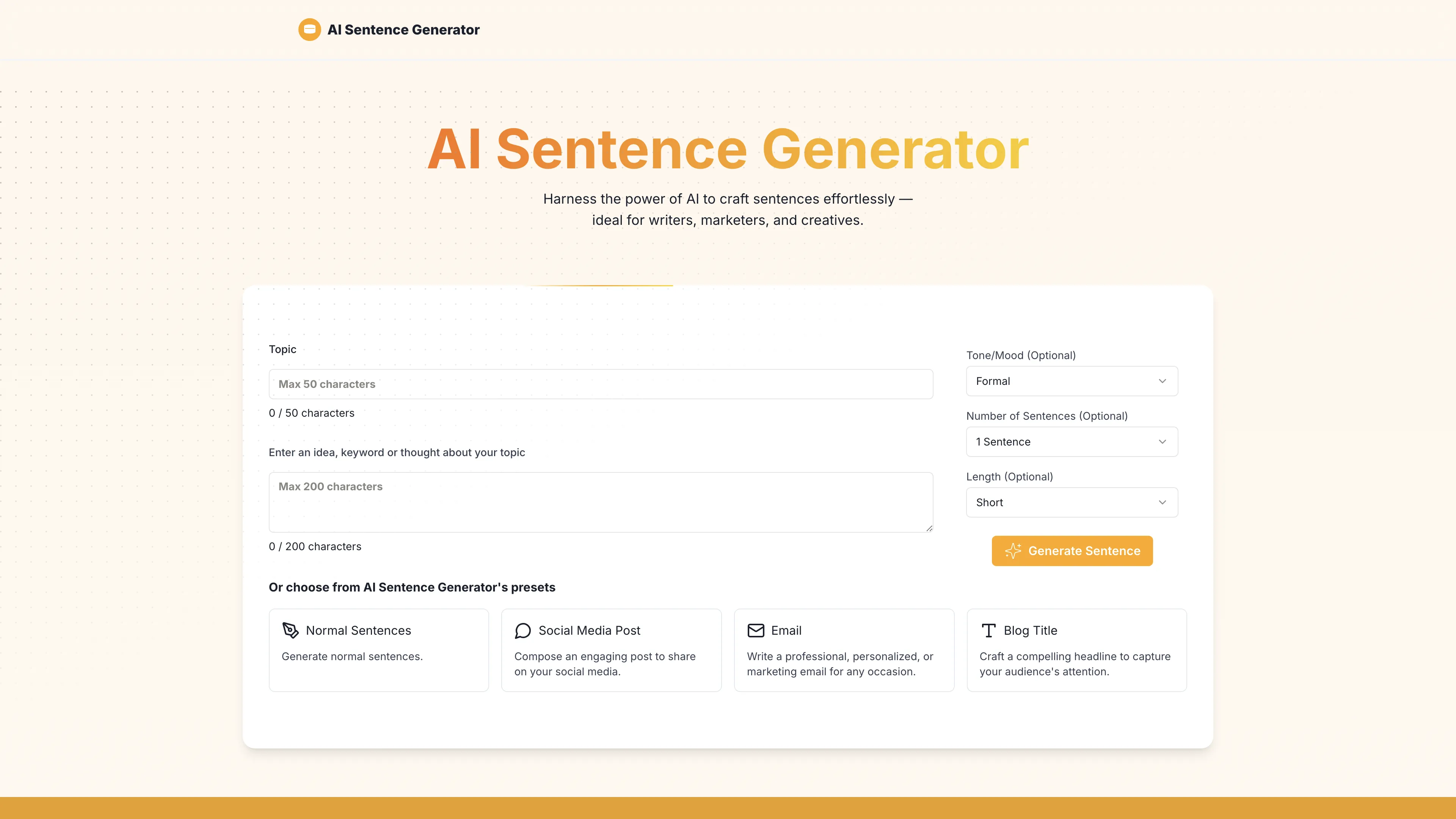

AI Sentence Generator creates tailored sentences quickly for content creators, marketers, and more, improving productivity with ease and accuracy.

AI Summarizer provides free, precise summaries of articles and texts, preserving context and supporting multiple languages for efficient information processing.

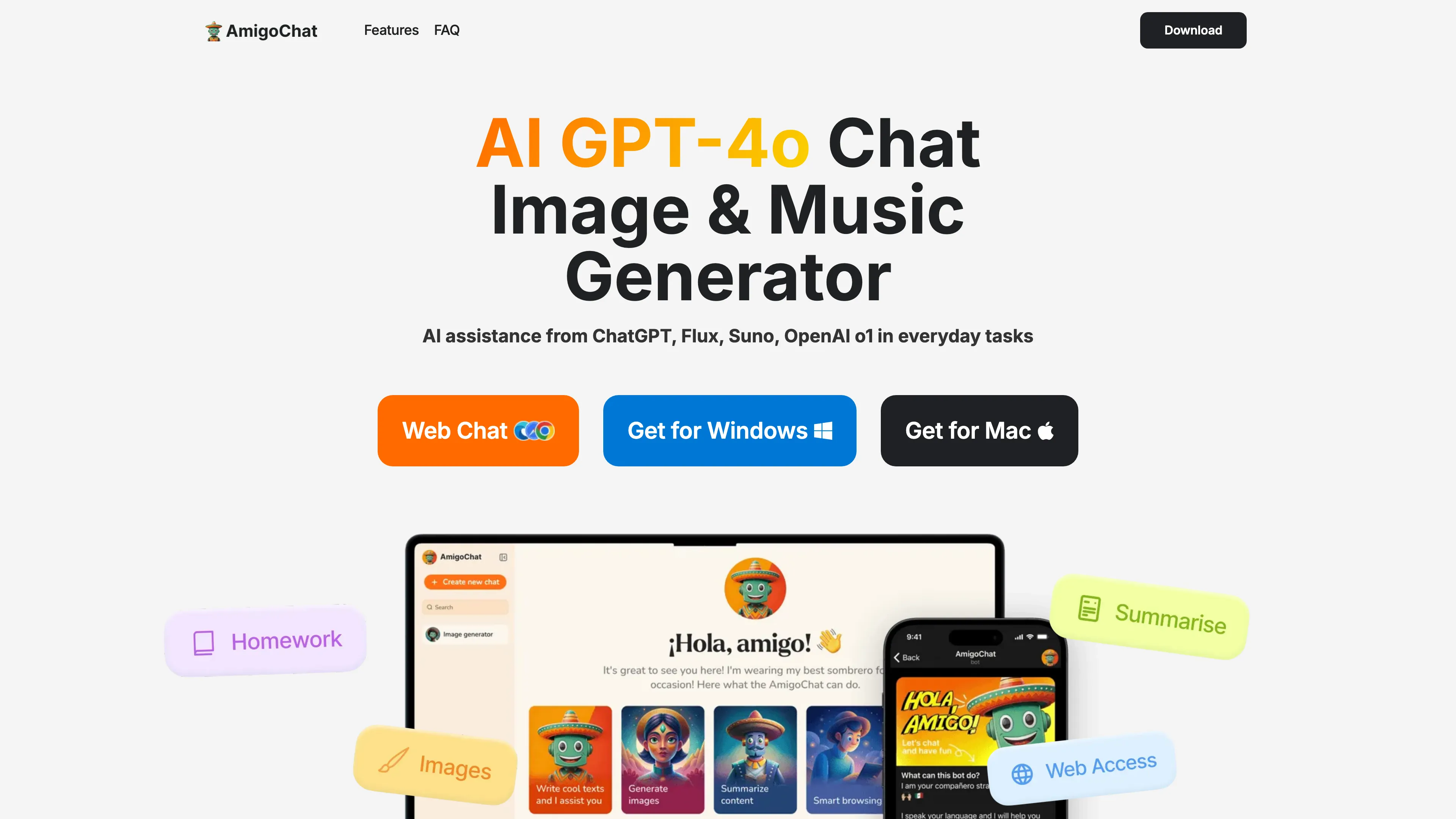

AmigoChat offers AI-powered friendly conversations, creative content generation, and secure data handling, making it your versatile digital companion.

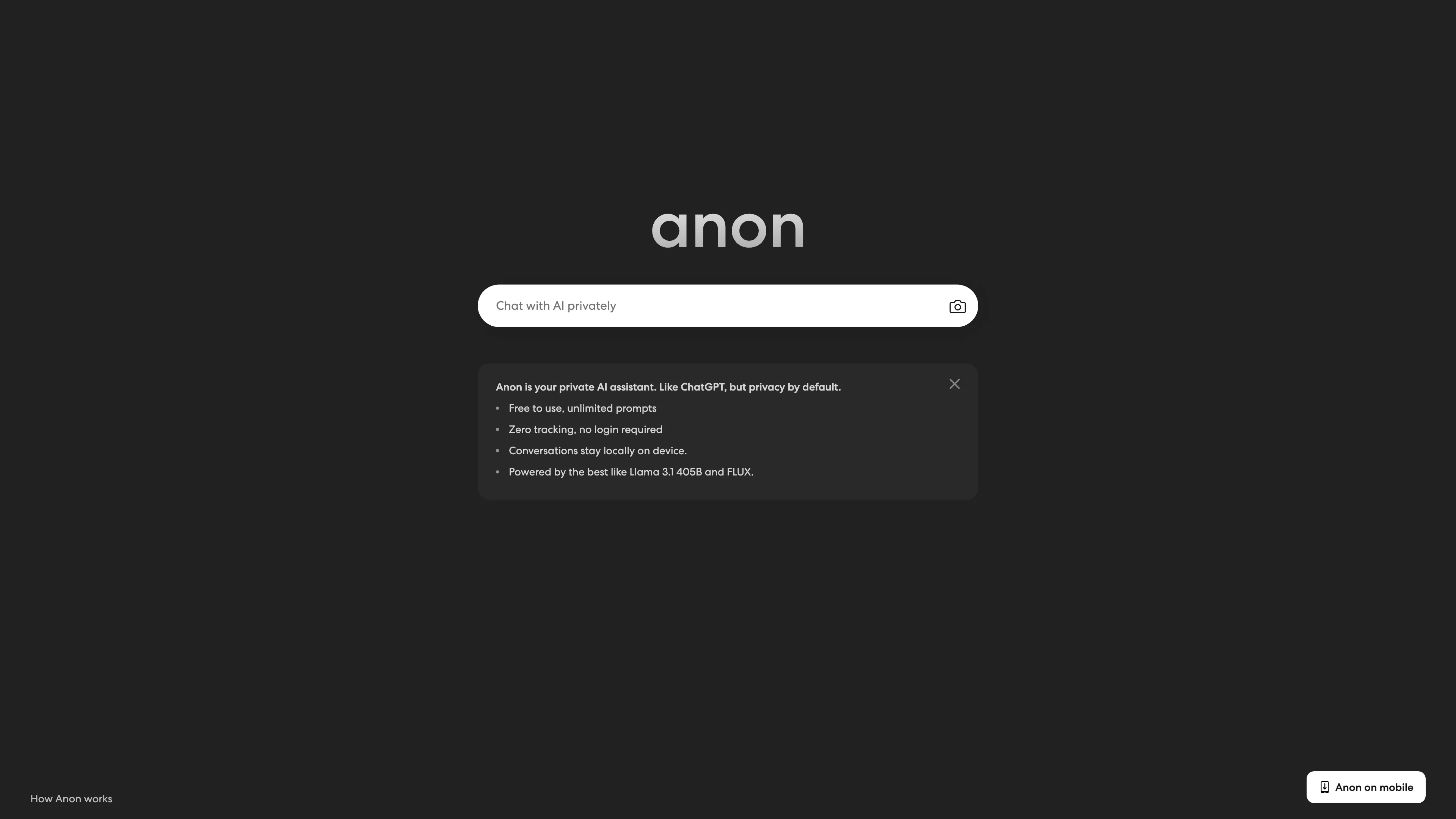

AnonAI: A private AI assistant like ChatGPT with no tracking, no logins, and local data storage, powered by top open-source AI models.